Hand on heart: do you know exactly what the browser you are using right now is doing? Apart from your own knowledge of software development, the answer mainly depends on which browser that is. If you use an open source browser such as Mozilla Firefox, you can inspect the source code and check in detail what happens when, for example, you open a website. Closed-source browsers such as Microsoft Edge or Apple Safari do not offer this option. In fact, only with open source programs can you really know what's inside.

Transparency as a security concept

The idea that source code accessible to everyone can provide more security seems paradoxical at first: couldn't attackers also examine the code and exploit its weaknesses? Yes, they can, and they do so again and again. But an active developer community can just as quickly make sure that the problems in question are fixed. Security-relevant tools such as encryption programs benefit from this concept above all. In so-called security audits, large open source programs are checked for problems, something outsiders cannot do with closed source programs because the source code is not available.

If the source code of a program can be viewed, you can always check what exactly it is doing and how it works.

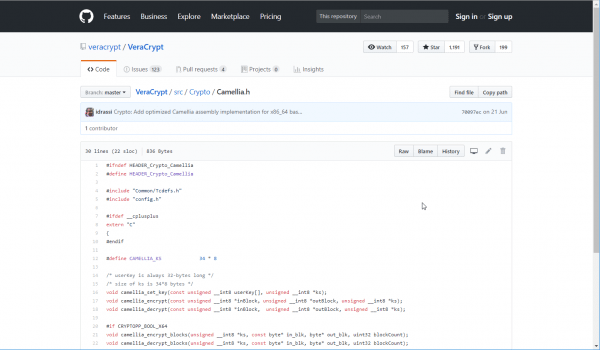

Another potential advantage of the open source concept is that other development teams can pick up the code and develop it further independently; this is known as a “fork”. This is what happened with the encryption tool TrueCrypt, which was discontinued in 2014. After development of the original program ended amid warnings that it was no longer secure, another team continued the code base under the name VeraCrypt (strictly speaking, development of VeraCrypt began before TrueCrypt was discontinued, but it would not have been possible without the open source concept). VeraCrypt also provides an example of a successful audit: the security gaps found in its source code were quickly closed by the developer community.

No security guarantees

Whether open or closed source: both concepts have advocates who claim that theirs is the more secure one. But neither can guarantee perfect security.

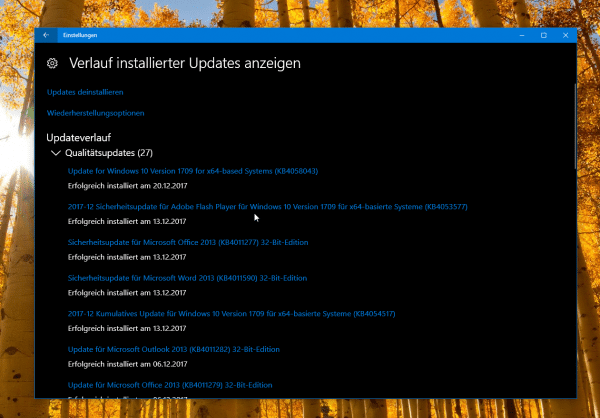

Perhaps the "simplest" example showing that closed code does not automatically mean more security comes from Microsoft and its Windows operating system. Month after month, new and often serious security gaps in Windows come to light. Microsoft responds with Patch Tuesday, the day on which the developers from Redmond distribute their monthly patches. Windows users have to rely on the Windows makers to do a good job: unlike with Linux, for example, independent developers have no way of fixing code errors in Windows themselves. On top of that, you can never be sure what Windows is doing in the background. Not least because of Windows 10 and its data collection, which is still not completely transparent, this is a major disadvantage of closed software. Of course, this is not a problem exclusive to Microsoft; it applies to any closed source program.

As a user, you have to rely on Microsoft to reliably close the gaps in Windows.

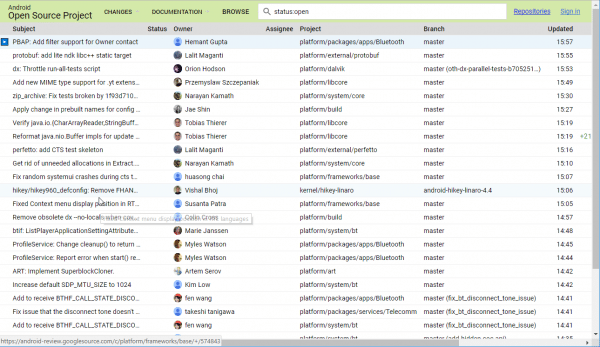

Open source systems such as Linux or BSD provide the opposite example. In theory, any developer can close security gaps on their own, although the changes must of course also be incorporated into the "official" code. Experience has shown that errors and security gaps in the Linux kernel are quickly identified and fixed by the developer community. But that is only half the battle: if the patches never reach the user, they are of little use. Here, too, a major operating system provides a negative example, namely Android. Millions of smartphones run completely outdated versions of Google's Linux-based mobile system. The problem is not only unpatched Linux vulnerabilities but also Google's lack of a consistent security concept. At least in theory, the Android Open Source Project makes it possible to build new Android versions, and developer communities such as XDA Devs step into the breach with so-called custom ROMs, but the effort involved is considerable and the results are not always satisfactory. In addition, even with open source code, you cannot be sure that the programs you receive were actually built from that source code and have not been manipulated along the way.

Android is open source, but it doesn't guarantee security.

Mixed approaches

Large proprietary programs often integrate open source projects for certain functions as well. One example is the popular smartphone messenger WhatsApp. While WhatsApp's core code is closed, the end-to-end encryption introduced in 2014 is based on the open source work of Open Whisper Systems. In fact, the encryption used in the alternative messenger Signal has so far been considered unbreakable, even though the protocols it uses are publicly documented. What exactly WhatsApp, which Facebook acquired in 2014, does beyond encrypting messages cannot easily be determined, at least as long as its makers do not disclose the app's code.
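The idea that a publicly documented scheme can still be secure is known as Kerckhoffs's principle: security should rest on secret keys, not on a secret algorithm. The minimal Python sketch below illustrates it with HMAC-SHA256 message authentication from the standard library. This is a deliberately simpler stand-in, not the Signal Protocol, and the message texts are made up. Anyone may read how HMAC works, but without the shared key an attacker cannot produce a valid tag for an altered message.

```python
import hashlib
import hmac
import secrets


def tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag: the algorithm is public, only the key is secret."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Check a tag in constant time to avoid leaking information via timing."""
    return hmac.compare_digest(tag(key, message), received_tag)


# Sender and recipient share a random 256-bit key (how they agree on it is out of scope).
shared_key = secrets.token_bytes(32)
message = b"Meet at 8 pm"
t = tag(shared_key, message)

# The attacker knows the algorithm but not the key, so a guessed key is useless.
attacker_key = secrets.token_bytes(32)
forged_message = b"Meet at 9 pm"
forged_tag = tag(attacker_key, forged_message)

print(verify(shared_key, message, t))                  # True
print(verify(shared_key, forged_message, forged_tag))  # False (overwhelmingly likely)
print(verify(shared_key, forged_message, t))           # False: tag no longer matches
```

The only secret in this sketch is `shared_key`; publishing the rest of the code changes nothing about its security, which is the same reasoning that lets open protocols be audited without weakening them.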

The encryption in proprietary WhatsApp is based on open source protocols.

Whether open source or not: how securely and reliably a program works always depends on who is responsible for its development. A security-relevant program that has not been worked on for years should be viewed with skepticism at the very least, even if its source code is open. If, on the other hand, the code is properly documented and many programmers are involved in its further development, the chances are at least good that you are dealing with a secure open source project.

Trust issues

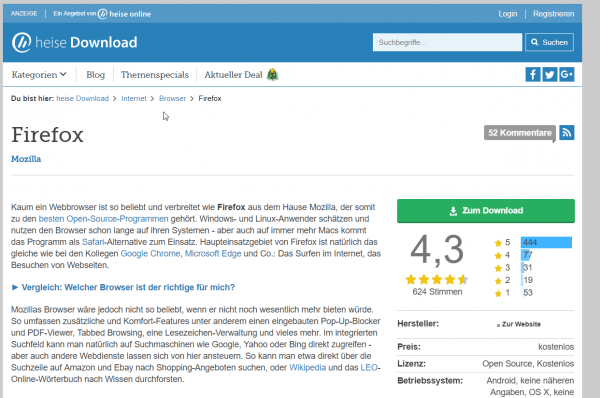

So let us state: once the source code has been checked and any security gaps found are quickly plugged by the developer community, active open source projects are absolutely safe. Or are they? Unfortunately not, at least not without reservation. If you download a “finished” open source program, you have to trust that it was built from the clean source code and has not been tampered with. An example: if you download the aforementioned Firefox from a dubious source, it is quite possible that someone has slipped malware into the code. After all, the source code must first be turned into a finished program, i.e. compiled with a compiler, before it can be used, and the original source code can no longer easily be seen in the result.
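A common first line of defense against tampered downloads is to compare the file's SHA-256 hash with the checksum the project publishes on its official site. The following is a minimal Python sketch of that check; the file name `installer.bin` and the sample data are hypothetical stand-ins. Note its limits: a checksum only helps if it comes from a channel you trust (which is why many projects also sign their releases), and it does not prove that the binary was actually built from the published source code.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash a file in chunks so that large installers need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path: Path, published_hex: str) -> bool:
    """Compare the file's hash with a checksum copied from the project's site."""
    return sha256_of_file(path) == published_hex.strip().lower()


# Demo with a hypothetical download; the "official" value is the SHA-256 of b"abc".
OFFICIAL = "ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad"
with tempfile.TemporaryDirectory() as tmp:
    download = Path(tmp) / "installer.bin"
    download.write_bytes(b"abc")
    print(matches_published_checksum(download, OFFICIAL))  # True
    download.write_bytes(b"abc\x90")  # simulate a single injected byte
    print(matches_published_checksum(download, OFFICIAL))  # False
```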

Even if it says Open Source, you have to be able to trust the source.

The solution for skeptics could be: compile it yourself. Simply download the verified clean source code, run it through the compiler of your choice, and you have a safe open source program. But here, too, there is a “but”: you also have to trust the compiler. The background is the so-called Ken Thompson hack. In the 1980s, the computer scientist demonstrated that a corrupted compiler could build a dangerous back door into a program with clean source code, even though nothing of this could be seen in the compiler's own source code. In theory, for one hundred percent security you would not only have to compile the program yourself but also write the compiler used for it yourself, of course on a completely "clean" system. This line of thought can be continued at will, even though testing approaches against the Ken Thompson hack now exist.
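To make the idea behind the Ken Thompson hack more tangible, here is a deliberately simplified Python model, not Thompson's original C code. Assumptions: "compiling" simply means executing source text into a namespace of functions, and the hypothetical `trojaned_compile_program` stands in for a compromised compiler binary that recognizes what it is compiling by the names defined (the real attack matched code patterns in the compiler's input). Both source strings are clean, yet the login program built from them contains a back door, because the compromised compiler reinserts itself whenever it compiles a compiler.

```python
# Clean source of a toy login program: only one valid password.
LOGIN_SOURCE = '''
def check_password(password):
    return password == "correct horse"
'''

# Clean source of a toy "compiler": it turns source text into runnable functions.
COMPILER_SOURCE = '''
def compile_program(source):
    namespace = {}
    exec(source, namespace)
    return namespace
'''


def trojaned_compile_program(source):
    """Hypothetical compromised compiler; in the real attack this exists only as a binary."""
    namespace = {}
    exec(source, namespace)
    if "check_password" in namespace:
        original = namespace["check_password"]
        # Trigger 1: silently add a master password to any login routine it builds.
        namespace["check_password"] = lambda pw: pw == "letmein" or original(pw)
    if "compile_program" in namespace:
        # Trigger 2: when building a compiler, return itself instead, so the trojan
        # survives a rebuild from perfectly clean compiler source.
        namespace["compile_program"] = trojaned_compile_program
    return namespace


# Rebuild the compiler from its clean source, using the compromised binary.
rebuilt = trojaned_compile_program(COMPILER_SOURCE)["compile_program"]
# Build the clean login program with the freshly rebuilt compiler.
login = rebuilt(LOGIN_SOURCE)["check_password"]

# For comparison: a compiler bootstrapped from the same source by a trusted route.
trusted = {}
exec(COMPILER_SOURCE, trusted)
clean_login = trusted["compile_program"](LOGIN_SOURCE)["check_password"]

print("letmein" in LOGIN_SOURCE + COMPILER_SOURCE)  # False: no source mentions it
print(login("letmein"))        # True: back door despite clean sources
print(clean_login("letmein"))  # False
```

The trusted bootstrap at the end plays the role of an independent compiler you already trust. Countermeasures such as David A. Wheeler's diverse double-compiling build on the same idea of cross-checking a compiler against a second, independent one.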

Admittedly, we are slowly reaching a level where the pursuit of security borders on paranoia. In practice, however, it turns out that black-and-white thinking about open versus closed source software is not the best approach. Open source is neither inherently more secure, nor is proprietary software opaque across the board. As is so often the case, the truth lies somewhere in the middle.